Illia [ilˈiə] Kuzminov

CX/UX researcher.

4+ years in Research and DesignOps. Specializing in the end-to-end research cycle and in integrating data-driven approaches into product strategies. Expertise spans project and product management, art direction, and data analysis.

Automated Service for Ordering Electronic Modules: Optimizing UX in B2B SaaS

/CONTEXT

As a UX Research assistant at the company, I was tasked with enhancing the user experience for a unique B2B SaaS solution that automated the ordering of electronic modules. This project was particularly challenging due to the niche market and the difficulty in recruiting relevant participants for usability studies.

/TEAM

Account Manager, Domain Advisor, PM, Lead UX Researcher, UX Designer, UX Researcher Assistant

/TOOLS

Figma, Excel, Google Forms, Google Analytics

/RESULTS

— Enhanced task completion rates during usability tests by 40%.

— Reduced error rates in user tasks by 25%.

— Boosted overall user satisfaction scores, reflected in post-study surveys.

— Accelerated the development process by optimizing resource allocation.

— Improved user engagement by 30%, leading to increased usage of the service.

— Decreased onboarding time for new users by 20%, making the service more accessible.

— Increased accuracy of BOM file uploads by 50%, significantly reducing manual corrections.

/DELIVERED ARTIFACTS

— Data analysis reports, categorized screen recordings

— Usability Test plans and summary reports

— Design specifications

— Benchmarking reports

/RESEARCH PROCESS

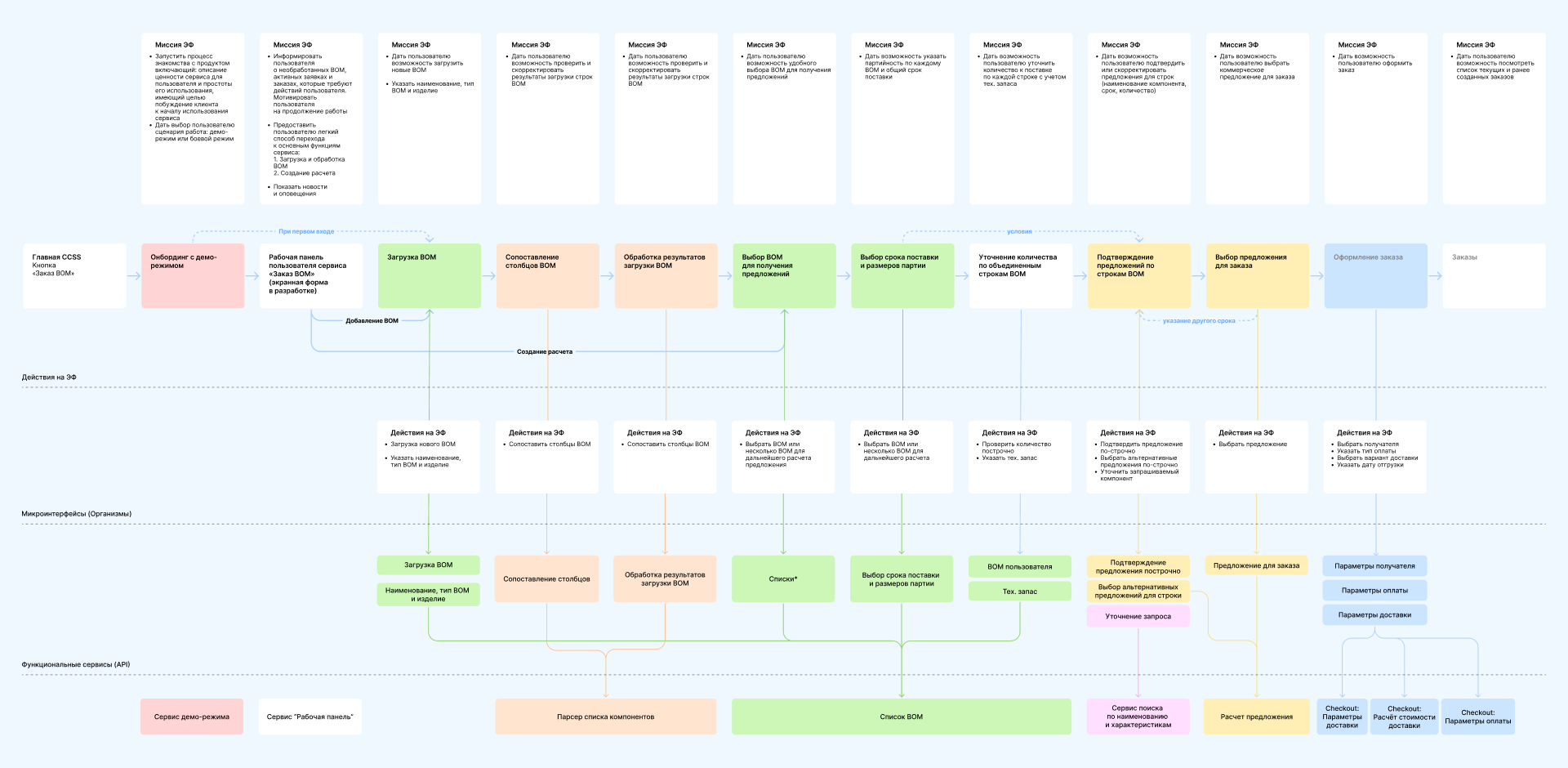

I joined the project during the user-scenario development phase, where my responsibilities included assisting the lead UX analyst and the business analyst. We tried to make the scenario as linear as possible, because forming an order requires a lot of data, including PCB parameters, a BOM file, and design documentation. The linear script spared users from handling a large amount of information at once and allowed them to load data step by step. After several iterations, the script looked like this:

Once the script was agreed, we started designing, beginning with the most complicated layout: the fifth step in order placement. Many processes were tied to this layout, and it influenced the other stages of the scenario. As the prototypes became ready, we passed them straight to design to speed up the development process.

At one point we realized that the goal-oriented design approach needed to be validated through research, by testing the interface with real users. Since the fifth step was the most difficult and had the most hypotheses associated with it, we decided to start testing there. Our research focused on the process of uploading BOM (Bill of Materials) files. BOM files, essential for listing the materials and components needed for assembly, typically come in various formats such as Excel, PDF, DOC, or TXT. To streamline the user experience, we implemented a parser that recognizes the information in these files and uploads it directly into the system, eliminating the need for manual data entry.

However, designing the drag-and-drop area for file upload and ensuring accurate data parsing presented several challenges. We had to:

- Define the visual and functional aspects of the drag-and-drop area, making it reusable across different parts of the service.

- Create concise and clear instructional text for users regarding the types and formats of files they could upload.

- Design all states of the upload area, including hover, drag, upload progress, and error scenarios.

To address these challenges, we analyzed various BOM files provided by clients, identifying common errors and devising solutions. We categorized errors into those that could be fixed within the service and those requiring user intervention. For the latter, we developed error messages to guide users in correcting their files.
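
The split between errors the service can fix and errors the user must fix can be illustrated with a small sketch. It is not the production parser: the `BomIssue` type, the `check_quantity` and `triage` helpers, and the "strip a trailing unit" rule are all hypothetical, chosen only to show the two categories side by side.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BomIssue:
    row: int
    column: str
    message: str
    auto_fixable: bool  # True: fixed inside the service; False: user must edit the file


def check_quantity(row: int, raw: str) -> tuple[str, Optional[BomIssue]]:
    """Return the (possibly cleaned) quantity and an issue, if one was found."""
    cleaned = raw.strip()
    if cleaned == "":
        # Missing data cannot be guessed, so this goes back to the user.
        return raw, BomIssue(row, "quantity", "Quantity is missing.", False)
    # Hypothetical auto-fix: keep the leading number of values like "10 pcs".
    number = cleaned.split()[0].replace(",", "")
    if number.isdigit():
        if number != raw:
            note = f"Normalized '{raw}' to '{number}'."
            return number, BomIssue(row, "quantity", note, True)
        return number, None
    return raw, BomIssue(row, "quantity", f"'{raw}' is not a whole number.", False)


def triage(quantities: list[str]) -> tuple[list[BomIssue], list[BomIssue]]:
    """Split detected issues into auto-fixed ones and ones needing the user."""
    fixed, needs_user = [], []
    for row, raw in enumerate(quantities, start=1):
        _, issue = check_quantity(row, raw)
        if issue is None:
            continue
        (fixed if issue.auto_fixable else needs_user).append(issue)
    return fixed, needs_user


fixed, needs_user = triage(["5", " 10 pcs", "", "ten"])
for issue in needs_user:
    print(f"Row {issue.row}: {issue.message}")
```

Only the issues in `needs_user` would surface as error messages; the auto-fixed ones could be applied silently or shown as a short notice.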

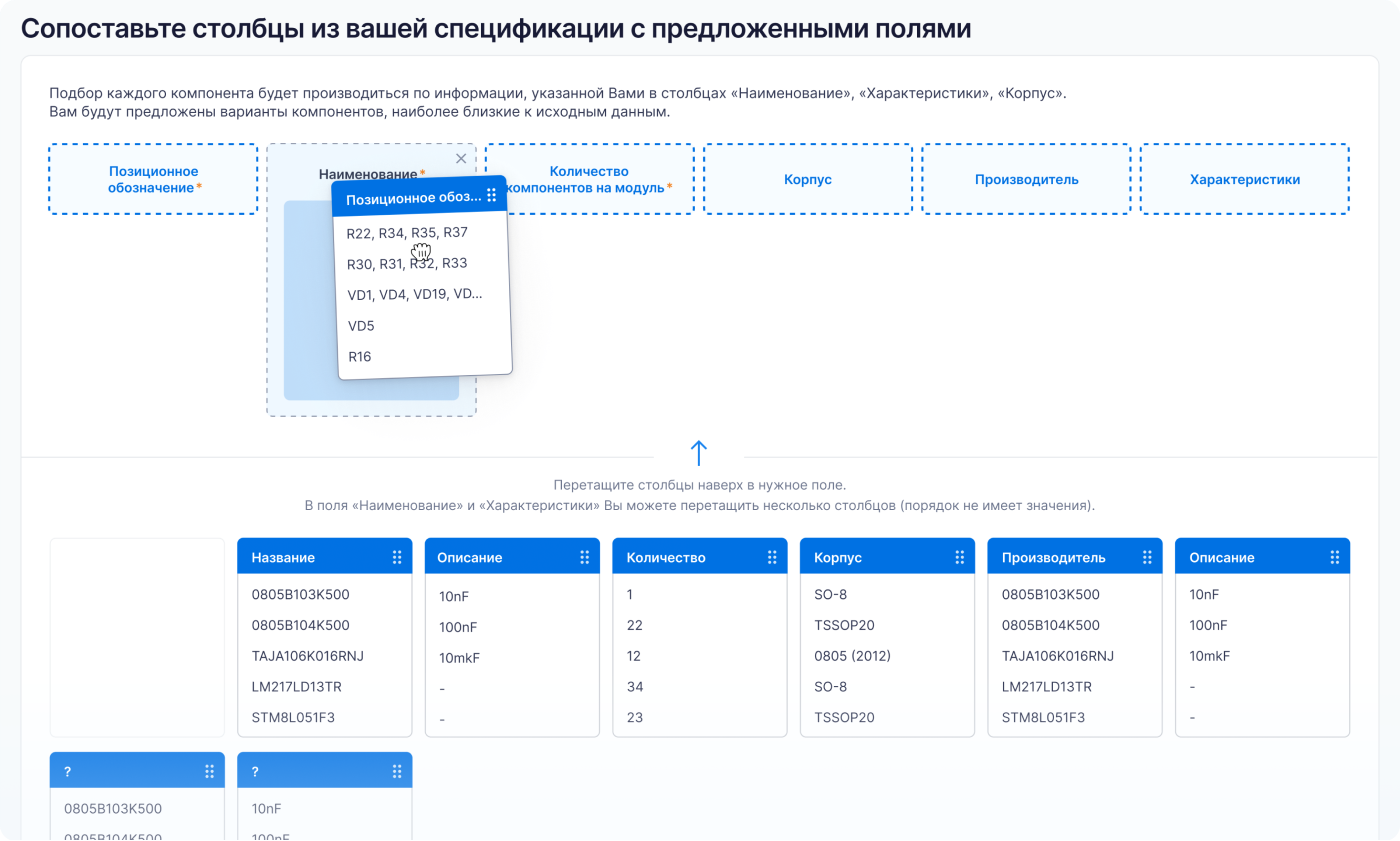

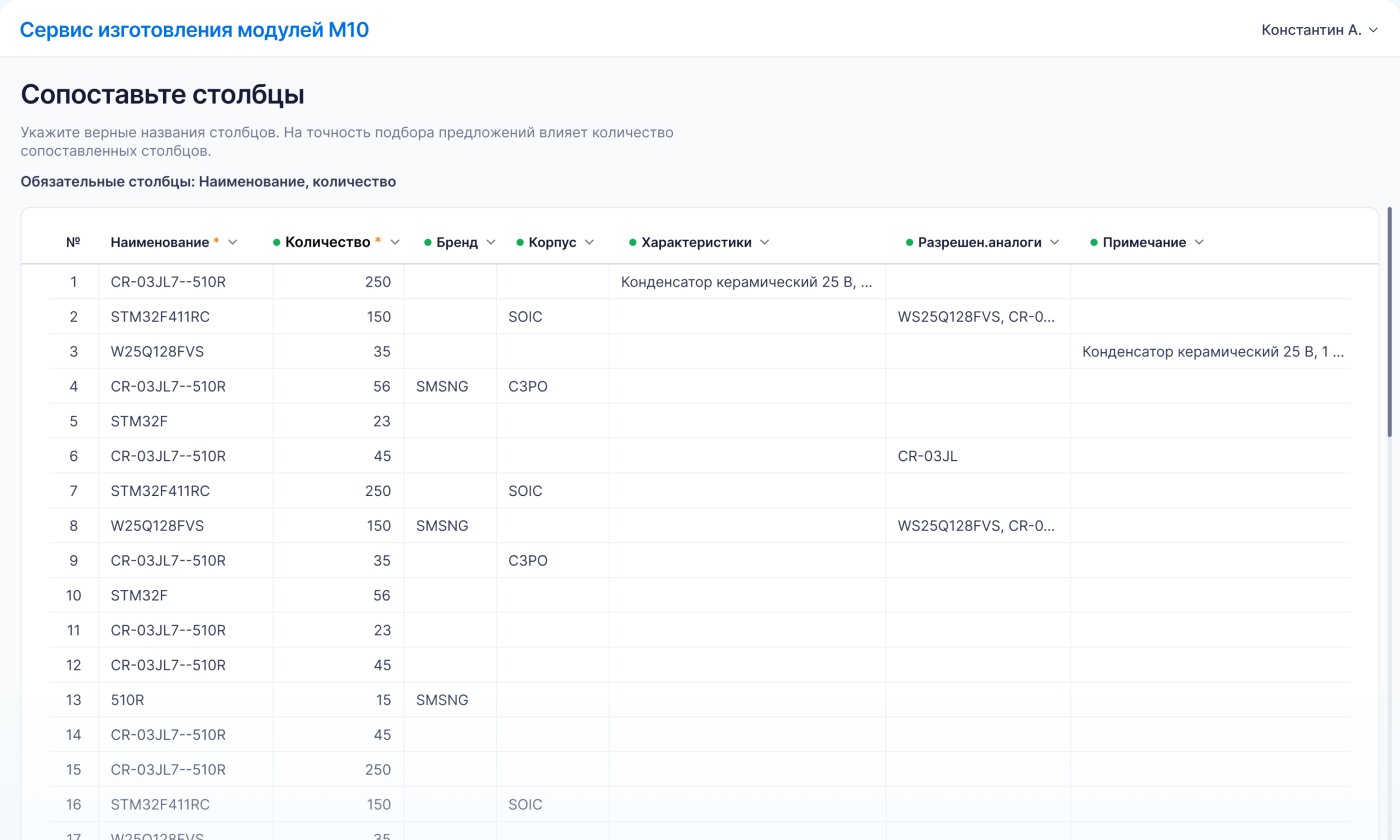

The interface design included two areas: one for system columns and one for columns from the uploaded document. Users had to match these columns for accurate component recognition. Post-matching, the system checked for errors or missing values, prompting users to correct issues before proceeding.

This thorough analysis and iterative design process enabled us to develop a robust solution that significantly improved the accuracy and efficiency of BOM file uploads.

During the discovery phase, I immersed myself in the domain by engaging with industry professionals to understand their specific needs and challenges. This foundational understanding was crucial for recruiting suitable participants for our study. I devised a targeted recruitment strategy and successfully enlisted professionals with the necessary expertise.

With a pre-production version of our service ready, I conducted a series of usability studies. These sessions were meticulously designed to capture comprehensive data, including screen activity, facial expressions, and hand movements. I facilitated ongoing usability testing and incorporated post-study questions, such as the Single Ease Question (SEQ) and Usability Metric for User Experience (UMUX).
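
For readers unfamiliar with these questionnaires, the sketch below shows how responses are commonly scored. It assumes the standard four-item UMUX on a 7-point scale, where items 1 and 3 are positively worded and items 2 and 4 negatively worded, and a 7-point SEQ summarized as a mean. The response values are made up for illustration and are not data from this study.

```python
from statistics import mean


def umux_score(responses: list[int]) -> float:
    """Convert one participant's four UMUX answers (1-7) to a 0-100 score."""
    if len(responses) != 4 or any(not 1 <= r <= 7 for r in responses):
        raise ValueError("UMUX needs exactly four answers between 1 and 7.")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            total += answer - 1   # items 1 and 3: higher agreement is better
        else:
            total += 7 - answer   # items 2 and 4: higher agreement is worse
    return total / 24 * 100       # 24 is the maximum possible raw sum


def mean_seq(ratings: list[int]) -> float:
    """Average Single Ease Question rating (1 = very difficult, 7 = very easy)."""
    return mean(ratings)


# Hypothetical answers from three participants.
participants = [[6, 2, 6, 3], [5, 3, 5, 2], [7, 1, 6, 2]]
print([round(umux_score(p), 1) for p in participants])
print(mean_seq([5, 6, 7]))
```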

During testing, we discovered that users often named columns inconsistently, which led to errors in data parsing and to user frustration. For instance, one user labeled a column "Part Number" while another used "Component ID". To address this, I proposed and tested a column-matching interface where users could map their columns to system-recognized names.

After analyzing the collected data, I synthesized the findings into a detailed report. The report included key artifacts such as quotes, recorded highlight moments, and participant responses. I presented actionable recommendations to improve the user flow and interface, emphasizing changes that were impactful yet feasible to implement.
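
The inconsistent column names found in testing ("Part Number" versus "Component ID") suggest one way a matching interface could pre-fill its suggestions: normalize each uploaded header and look it up in an alias table. This is a sketch under assumptions, not the shipped logic. The `HEADER_ALIASES` table, the `part_number` system name, and both function names are hypothetical.

```python
import re
from typing import Optional

# Hypothetical alias table: system column -> header spellings seen in client files.
HEADER_ALIASES = {
    "part_number": {"part number", "component id", "part no"},
    "quantity": {"quantity", "qty", "amount"},
}


def normalize(header: str) -> str:
    """Lowercase the header and collapse punctuation and spacing to single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", header.lower()).strip()


def suggest_system_column(header: str) -> Optional[str]:
    """Return a suggested system column, or None so the user maps it by hand."""
    key = normalize(header)
    for system_column, aliases in HEADER_ALIASES.items():
        if key in aliases:
            return system_column
    return None


for header in ["Part Number", "component_ID", "  QTY. ", "Notes"]:
    print(header, "->", suggest_system_column(header))
```

A suggestion of `None` leaves the decision with the user, which keeps the manual mapping step as the fallback rather than guessing.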

Working closely with the design team, we iteratively refined the visual language and user flow. Key insights from the usability studies were shared with the PO and PM, leading to prioritized changes that were both impactful and feasible. Our collaborative efforts culminated in a final design that significantly improved user satisfaction and engagement. Usability testing was conducted with a sample similar to that of the discovery phase, including churned, active, and potential users. After launching M10, the company invited a subset of its clients to test the interfaces. Within a few weeks, our team gathered statistics and user feedback, which led to a list of improvements.

One significant change was in the column-matching interface. Initially, the interface was:

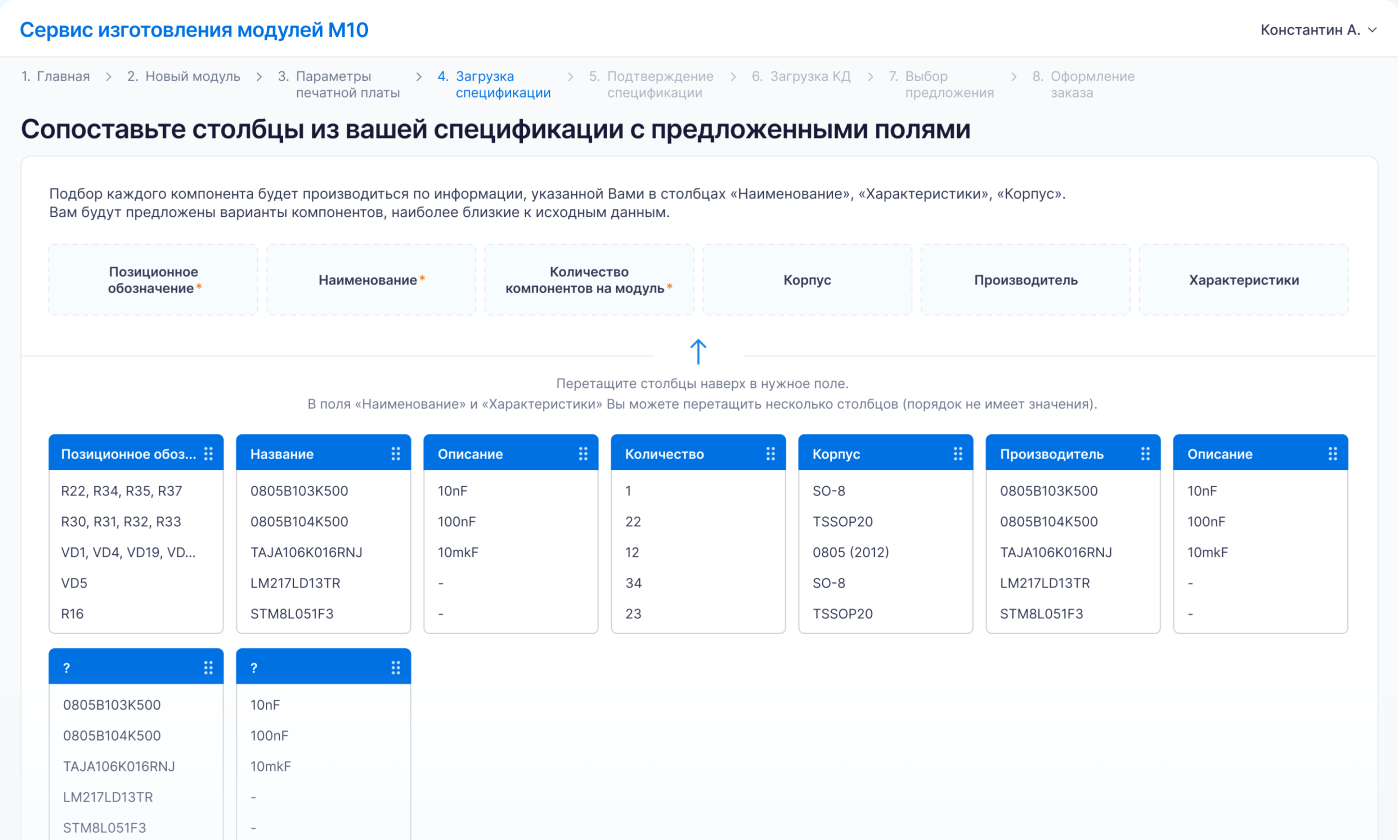

However, users found this approach inconvenient as they were accustomed to working with Excel. Consequently, we redesigned it based on familiar tools:

The final version required users to go through each column and select the appropriate name from a dropdown list. This included mandatory columns (name, quantity) and optional ones (brand, characteristics). Without filling the mandatory columns, users couldn't proceed, while optional columns helped refine the search.
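
The rule that users cannot proceed until the mandatory columns are mapped can be expressed as a small check. The column names come from the description above; the function names and the shape of the `mapping` dictionary (uploaded header to selected system column, or `None` when nothing is selected) are assumptions made for this sketch.

```python
from typing import Optional

MANDATORY_COLUMNS = ("name", "quantity")
OPTIONAL_COLUMNS = ("brand", "characteristics")


def missing_mandatory(mapping: dict[str, Optional[str]]) -> list[str]:
    """List mandatory system columns that no uploaded column is mapped to."""
    chosen = {value for value in mapping.values() if value is not None}
    return [column for column in MANDATORY_COLUMNS if column not in chosen]


def can_proceed(mapping: dict[str, Optional[str]]) -> bool:
    """The next step unlocks only when every mandatory column is mapped."""
    return not missing_mandatory(mapping)


# Hypothetical state of the dropdowns while the user is still matching columns.
mapping = {"Part Number": "name", "Qty": None, "Vendor": "brand"}
print(can_proceed(mapping), missing_mandatory(mapping))

mapping["Qty"] = "quantity"
print(can_proceed(mapping))
```

Optional columns never block progress in this sketch; they only add detail once mapped.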

After implementing this solution, usability tests showed a 40% increase in task completion rates and a 25% reduction in errors. Users appreciated the flexibility and clarity of the new interface, as reflected in post-study surveys where satisfaction scores improved significantly.

This project underscored the importance of iterative testing and user feedback in refining complex interfaces. It also highlighted the value of cross-functional collaboration in achieving user-centered design solutions. Moving forward, I plan to apply these lessons to future projects, ensuring that user needs and behaviors drive design decisions.
